What you’ll learn:

- Shortcomings of frame-based sensors like LiDAR.

- How event-based sensors outperform their frame-based cousins thanks to their ultra-low latency.

- What is VoxelFlow?

Leading autonomous-vehicle (AV) companies have mostly relied on frame-based sensors, such as LiDAR. However, the technology has historically struggled to detect, track, and classify objects quickly and reliably enough to prevent many corner-case collisions, like a pedestrian suddenly appearing from behind a parked vehicle.

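Frame-based systems certainly play a part in the advancement of AVs. But consider the harrowing statistics: 80% of all crashes and 65% of all near-crashes involve driver inattention within the last three seconds before the event. Given that, the industry needs to work toward faster, more reliable systems that address safety at short range, where we’re most likely to see a collision.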

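Frame-based sensors have a role, but when it comes to AV technology, they shouldn’t be a one-man show. Enter event-based sensors, a technology being developed for advanced driver-assistance and autonomous-driving systems that gives cars enhanced safety where LiDAR falls short.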

How Does LiDAR Fall Short?

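LiDAR, or light detection and ranging, is the most prominent frame-based technology in the AV space. It uses invisible laser beams to scan objects. Compared with the human eye, LiDAR scans and detects very quickly. However, in the grand scheme of AVs, where it’s a matter of life and death, LiDAR systems with advanced perception-processing algorithms and state-of-the-art perception pipelines perform adequately only at distances beyond 30 to 40 meters. Within that range, where drivers are most likely to crash, they don’t react fast enough.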

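Generally, automotive cameras operate at 30 frames per second (fps), which introduces a process delay of 33 ms per frame. To accurately detect a pedestrian and predict his or her path, multiple passes per frame are required. As a result, the system can take hundreds of milliseconds to act, yet a vehicle traveling at 60 km/h covers about 3.3 meters in just 200 ms. In a particularly dense urban environment, the danger of this delay is even greater.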
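The latency arithmetic in this paragraph is easy to reproduce. The minimal Python sketch below uses hypothetical helper names and a few illustrative latency values; it simply converts a frame rate into a per-frame delay and a reaction delay into distance traveled. At 60 km/h (about 16.7 m/s), 200 ms of delay corresponds to roughly 3.3 m.

```python
def frame_period_ms(fps: float) -> float:
    """Time between frames, in milliseconds, for a sensor running at `fps`."""
    return 1000.0 / fps


def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance (meters) covered at `speed_kmh` before the system reacts."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000.0


period = frame_period_ms(30)  # about 33.3 ms per frame at 30 fps
print(f"Frame period at 30 fps: {period:.1f} ms")
# Illustrative latencies: one frame, then several frames' worth of processing.
for latency in (period, 100.0, 200.0, 300.0):
    print(f"{latency:6.1f} ms at 60 km/h -> {distance_travelled_m(60, latency):.2f} m")
```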

LiDAR, like today’s camera-based computer-vision and artificial-intelligence navigation systems, is subject to fundamental speed limits of perception because it relies on this frame-based approach. Put simply, a frame-based approach is too slow!

Many existing systems actually use cameras and sensors that aren’t much more capable than what comes standard in iPhones, which produce a mere 33,000 light points per frame. A faster sensor modality is needed if we want to significantly reduce process delay and more robustly and reliably support the “downstream” algorithm processing of path prediction, object classification, and threat assessment. What’s needed is a new, complementary sensor system that’s 10X better, targeting an accurate 3D depth map generated within 1 ms, not tens of milliseconds.
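To put the point counts side by side, here is a small Python sketch. It assumes, for illustration only, that the 33,000 points are delivered every frame at the 30 fps cited above, and it borrows the 10 million 3D points per second quoted for VoxelFlow later in this article; the helper names are hypothetical.

```python
def points_per_second(points_per_frame: int, fps: float) -> float:
    """Aggregate point rate of a frame-based sensor (assumed steady frame rate)."""
    return points_per_frame * fps


def points_in_window(points_per_s: float, window_ms: float) -> float:
    """Points a continuous sensor delivers within a `window_ms` time budget."""
    return points_per_s * window_ms / 1000.0


frame_rate = points_per_second(33_000, 30)  # assumption: 33,000 points/frame at 30 fps
event_rate = 10_000_000                     # points/s, the figure quoted for VoxelFlow

print(f"Frame-based: {frame_rate:,.0f} points/s")
print(f"Event-based: {event_rate:,.0f} points/s")
print(f"Ratio: {event_rate / frame_rate:.1f}x")
print(f"Event-based points within a 1 ms budget: {points_in_window(event_rate, 1.0):,.0f}")
```

Under those assumptions the frame-based sensor delivers about 990,000 points per second, roughly a tenth of the event-based figure, and the event-based rate still yields about 10,000 points inside a 1 ms window.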

Aside from LiDAR’s inability to react fast enough, LiDAR systems are often very costly. With prices ranging from a couple of thousand to hundreds of thousands of dollars, that cost often falls on the end user. In addition, because the technology is constantly being developed and updated, new sensors keep hitting the market at premium prices.

LiDAR systems also aren’t the most aesthetically pleasing pieces of equipment, a major concern considering that they’re often fitted to luxury vehicles. The system has to be mounted somewhere with a clear line of sight to the front of the vehicle, which typically means a box on the vehicle’s roof. Do you really want to drive around in your sleek, new sports car with a bulky box attached to the top of it?

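Cost and aesthetics are certainly drawbacks of LiDAR systems, but ultimately, the fundamental problem is that their resolution and slow scan rate make it impossible to distinguish a stationary lamppost from a running child. What’s needed is a system that delivers far more than the tens of thousands of points LiDAR provides. In fact, point density should be in the tens of millions.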
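Why does point count matter for telling a lamppost from a child? A rough geometric estimate helps. The Python sketch below assumes, purely hypothetically, that points are spread on a uniform angular grid over a 120° × 30° field of view and that a child is about 0.5 m wide and 1.1 m tall; none of these figures come from the article. It counts how many grid points fall on the child at 30 m.

```python
import math

# Hypothetical assumptions, for illustration only:
#   * points lie on a uniform square angular grid filling the field of view;
#   * field of view is 120 deg horizontal x 30 deg vertical;
#   * a child is roughly 0.5 m wide and 1.1 m tall.


def points_on_target(points_per_frame: float, fov_h_deg: float, fov_v_deg: float,
                     width_m: float, height_m: float, range_m: float) -> float:
    """Approximate number of grid points that land on a rectangular target."""
    # Angular spacing of a square grid that fills the field of view.
    spacing_deg = math.sqrt(fov_h_deg * fov_v_deg / points_per_frame)
    # Angles the target subtends at the given range.
    ang_w = math.degrees(2 * math.atan(width_m / (2 * range_m)))
    ang_h = math.degrees(2 * math.atan(height_m / (2 * range_m)))
    return (ang_w / spacing_deg) * (ang_h / spacing_deg)


for n in (33_000, 10_000_000):
    hits = points_on_target(n, 120, 30, 0.5, 1.1, 30)
    print(f"{n:>12,} points/frame -> ~{hits:,.0f} points on a child at 30 m")
```

With those assumptions, 33,000 points per frame put fewer than 20 points on the child, while 10 million put several thousand on it; because the count scales linearly with total points, the ratio between the two cases is about 300.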

Event-Based Sensors Are Driving the Future of AVs

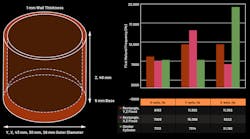

LiDAR struggles within 30 to 40 meters, but event-based sensors thrive in that range. Event-based sensors, such as the VoxelFlow technology currently being developed by Terranet, will be able to classify dynamic moving objects at extremely low latency using very little computational power. VoxelFlow produces 10 million 3D points per second, as opposed to only 33,000 per frame, and the result is rapid edge detection without motion blur (see figure). The ultra-low latency of event-based sensors ensures that the vehicle can handle corner cases by braking, accelerating, or steering around objects that suddenly appear in front of it.
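Event-based image sensors don’t read out full frames. A common simplified model of such a pixel, as used for dynamic vision sensors (DVS), emits an event whenever the log intensity at that pixel has changed by more than a contrast threshold since its last event. The Python sketch below applies that generic model to a few intensity samples; the threshold, the input data, and the function names are hypothetical, and it is not a description of Terranet’s VoxelFlow pipeline.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    x: int
    y: int
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 = brighter, -1 = darker


def generate_events(frames: List[List[List[float]]],
                    timestamps_us: List[int],
                    threshold: float = 0.2) -> List[Event]:
    """Emit per-pixel events when log intensity moves by `threshold` or more.

    `frames` are successive intensity samples used only to drive the
    simulation; a real event sensor does this asynchronously in each pixel.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    eps = 1e-6  # avoids log(0) for completely dark pixels
    ref = [[math.log(v + eps) for v in row] for row in frames[0]]
    events: List[Event] = []
    for frame, t in zip(frames[1:], timestamps_us[1:]):
        for y, row in enumerate(frame):
            for x, v in enumerate(row):
                delta = math.log(v + eps) - ref[y][x]
                # A large change can cross the threshold several times.
                while abs(delta) >= threshold:
                    pol = 1 if delta > 0 else -1
                    events.append(Event(x, y, t, pol))
                    ref[y][x] += pol * threshold
                    delta -= pol * threshold
    return events


# Hypothetical input: a bright edge moving right across a 1 x 4 pixel row.
frames = [
    [[1.0, 1.0, 1.0, 1.0]],
    [[2.0, 1.0, 1.0, 1.0]],
    [[2.0, 2.0, 1.0, 1.0]],
]
events = generate_events(frames, [0, 1000, 2000])
for e in events:
    print(e)
```

In this model, pixels that see no change produce no data at all, and a moving edge is reported by the pixels it crosses at the moment it crosses them rather than being integrated over an exposure. That is the intuition behind the “no motion blur” claim, although real sensors add noise, refractory periods, and per-pixel threshold mismatch that this sketch ignores.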

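Event-based sensors will be a key technology for bringing next-generation Level 1-3 advanced driver-assistance systems (ADAS) up to the required safety performance, while also delivering on the promise of truly autonomous L4-L5 AV systems. An event-based sensor system such as VoxelFlow consists of three event image sensors distributed around the vehicle and a centrally located continuous laser scanner, which together provide a dense 3D map with significantly reduced process delay to meet the real-time constraints of autonomous systems.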
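The article doesn’t explain how VoxelFlow converts event-sensor detections of the scanning laser into depth. A textbook way to recover depth from two calibrated, rectified cameras that see the same illuminated point is triangulation from disparity, Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras, and d the horizontal pixel disparity. The Python sketch below implements only that generic relation with hypothetical calibration values; it is an assumption for illustration, not Terranet’s method.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RectifiedStereo:
    """Hypothetical rectified camera pair (shared focal length, horizontal baseline)."""
    focal_px: float    # focal length in pixels
    baseline_m: float  # distance between the two camera centers, meters
    cx: float          # principal point x, pixels
    cy: float          # principal point y, pixels

    def triangulate(self, x_left: float, y: float, x_right: float) -> Tuple[float, float, float]:
        """Return (X, Y, Z) in meters in the left camera frame via Z = f*B/d."""
        disparity = x_left - x_right
        if disparity <= 0:
            raise ValueError("point must have positive disparity")
        z = self.focal_px * self.baseline_m / disparity
        x = (x_left - self.cx) * z / self.focal_px
        y_m = (y - self.cy) * z / self.focal_px
        return x, y_m, z


rig = RectifiedStereo(focal_px=1000.0, baseline_m=0.5, cx=640.0, cy=360.0)
print(rig.triangulate(x_left=660.0, y=370.0, x_right=640.0))  # prints (0.5, 0.25, 25.0)
```

Because Z is inversely proportional to d, a one-pixel disparity error costs far more depth accuracy at long range than at short range, and a wider baseline B reduces that error.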

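The event-based sensor system calibrates itself automatically and continuously to cope with shock, vibration, and blur, while still providing the angular and range resolution that ADAS and AV systems require. It also performs well in heavy rain, snow, and fog, conditions in which LiDAR systems degrade because of excessive backscatter.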

Because LiDAR is the industry standard and has proven to work well at ranges beyond 40 meters (even though it costs a fortune), event-based sensors will complement the technology, as well as other radar and camera systems. Raw data from those sensors can then be efficiently overlaid on the event-based sensor’s 3D mesh map. This will significantly improve detection and, most importantly, enhance safety and reduce collisions.
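Overlaying one sensor’s 3D points on another sensor’s image generally means transforming the points into the camera’s frame with an extrinsic rotation R and translation t, then projecting them through the camera intrinsics using the pinhole model. The pure-Python sketch below shows that generic step; the function names and calibration values are hypothetical placeholders, not parameters of any real VoxelFlow, LiDAR, or camera setup.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def transform(points: Sequence[Vec3], R: Sequence[Sequence[float]],
              t: Vec3) -> List[Tuple[float, ...]]:
    """Apply p_cam = R @ p + t to each point (sensor frame -> camera frame)."""
    return [tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))
            for p in points]


def project(points_cam: Sequence[Tuple[float, ...]], fx: float, fy: float,
            cx: float, cy: float) -> List[Tuple[float, float, float]]:
    """Pinhole projection; returns (u, v, depth) for points in front of the camera."""
    pixels = []
    for x, y, z in points_cam:
        if z <= 0:
            continue  # behind the camera, so it cannot be overlaid on the image
        pixels.append((fx * x / z + cx, fy * y / z + cy, z))
    return pixels


# Hypothetical calibration: identity rotation, 0.5 m lateral offset between sensors.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = (0.5, 0.0, 0.0)
points = [(1.0, 0.0, 10.0), (0.0, 0.5, 20.0), (0.0, 0.0, -5.0)]
pixels = project(transform(points, R, t), fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
print(pixels)
```

Projecting first keeps the comparison in the camera’s pixel grid, where image detections can be matched to nearby projected points; points with non-positive depth are dropped because they lie behind the camera.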

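With the technology at various stages of development, we expect to see prototype event-based sensors on vehicles next year. From there, the system will need to be productized and made smaller to meet today’s drivers’ aesthetic expectations. But there’s no doubt that event-based sensors will soon become an essential component of AV systems.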