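Arm is stepping up the pressure on Intel and AMD in the data center market by rolling out a new Neoverse CPU core that will serve as the blueprint for new server processors.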

Arm said the Neoverse N2 core, previously code-named Perseus, delivers up to 40% more instructions per clock (IPC) thanks to microarchitecture upgrades, and it also moves to the 5-nanometer process. Arm said it offers more performance within the same area and power budget as its previous-generation N1 core, which was tuned for the 7-nanometer node.

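The company claims it delivers industry-leading performance at a lower cost than rival chips from Intel and AMD, both of which use the x86 instruction set architecture. "There's no longer a choice between power efficiency or performance; we want you to have both," said Chris Bergey, vice president and general manager of Arm's infrastructure business.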

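The company also rolled out the V1 core, which promises the highest per-thread performance of any of its CPU cores. Arm said the V1, which is based on its Zeus microarchitecture and is part of its V-series family of CPU cores, runs 50% faster than the N1 at the same process node and clock speed, with higher performance in supercomputing, machine learning, and other areas.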

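But with the N2 core, the company is taking aim at the heart of the data center server market. Arm said the N2 core is focused on server processors with large numbers of CPU cores, making it a versatile, general-purpose design for everything from cloud data centers to the network edge. "The power-efficiency profile of the N2 core makes it competitive on threads per socket, while providing dedicated cores rather than threads," Bergey said (Fig. 1).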

Arm updated its server CPU roadmap with the V1 and N2 cores last year.

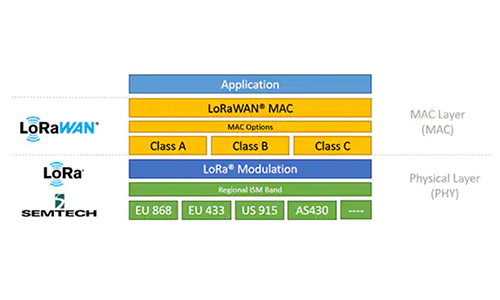

The N2 core is also the first in Arm's family of server cores to use its v9 architecture, which brings major improvements in performance, power efficiency, and security. The CPU also supports Arm's SVE2 (Scalable Vector Extension 2) technology, giving it more vector processing performance for AI, DSP, and 5G workloads (Fig. 2). The 64-bit CPU core includes dual 128-bit SVE processing pipelines. The move to the 5-nm node opens the door to a 10% boost in clock frequency.
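To put the dual 128-bit pipelines in perspective, the short Python sketch here works out how many vector elements the N2 could process per cycle at different data widths. It assumes, as an illustration rather than an Arm specification, that each pipeline can issue one fused multiply-add (FMA) per cycle and that an FMA counts as two floating-point operations; the helper names are hypothetical.

```python
# Back-of-the-envelope SVE throughput for a core with two 128-bit pipelines.
# Assumption (not an Arm figure): each pipe issues one FMA per cycle, and an
# FMA counts as two floating-point operations.
VECTOR_BITS_PER_PIPE = 128
NUM_PIPES = 2


def lanes_per_cycle(element_bits: int) -> int:
    """Vector elements processed per cycle across both pipes."""
    return NUM_PIPES * (VECTOR_BITS_PER_PIPE // element_bits)


def peak_flops_per_cycle(element_bits: int) -> int:
    """Peak FLOPs per cycle, assuming one FMA (2 FLOPs) per lane per cycle."""
    return 2 * lanes_per_cycle(element_bits)


for name, bits in [("FP64", 64), ("FP32", 32), ("FP16", 16)]:
    print(f"{name}: {lanes_per_cycle(bits)} lanes/cycle, "
          f"{peak_flops_per_cycle(bits)} FLOPs/cycle")
```

Under those assumptions, halving the element width doubles the work per cycle, which is why narrow data types used in AI and DSP benefit most from wider vector hardware.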

Chips based on the N2 core can also be equipped with DDR5 and HBM memory interfaces and other industry-standard protocols, including CCIX, CXL, and PCIe Gen 5, for attaching accelerators.

Arm licenses the blueprints for its chips to Qualcomm, Apple, and other companies that use them to develop chips better suited to their demands. The power efficiency of its cores has made it the gold standard in smartphones, and it is gaining ground in personal computers with Apple's M1. Arm is also trying to apply the principles of performance per watt and flexibility to data centers with its Neoverse CPU family.

While Arm does not compete directly with Intel or AMD, it is trying to convince technology giants such as Amazon, Microsoft, and Google to license its blueprints and use them to create server-grade processors that can replace Intel's Xeon Scalable CPUs in the cloud. Arm is also rolling out its semiconductor templates to startups such as Ampere Computing, which aims to take the performance crown from AMD's EPYC chips.

Arm said the N2 also stands out for being scalable (Fig. 3). Its customers can use the N2 core in server-grade chips in networking gear in data centers and 5G base stations, with up to 36 cores consuming up to 80 W. The N2 is also suited for server processors in cloud data centers that contain up to 192 cores clocked at faster speeds and devouring up to 350 W.
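A rough way to compare the two design points is to divide the quoted maximum power by the core count. The Python sketch here does only that arithmetic. Since the wattage covers the whole chip, including the mesh, memory controllers, and I/O, the per-core figure is an average of the chip's power budget, not a measured core power; the helper name is illustrative.

```python
# Maximum chip power divided by core count for the two N2 design points
# quoted by Arm. The wattage covers the whole chip (cores plus uncore), so
# this is an average share of the budget, not the power of one core.
designs = {
    "networking / 5G": {"cores": 36, "max_watts": 80},
    "cloud data center": {"cores": 192, "max_watts": 350},
}


def watts_per_core(cores: int, max_watts: float) -> float:
    """Average chip power budget per core at the quoted maximum power."""
    return max_watts / cores


for name, d in designs.items():
    print(f"{name}: {watts_per_core(d['cores'], d['max_watts']):.2f} W per core")
```

On these figures, the 36-core design works out to about 2.22 W per core and the 192-core design to about 1.82 W per core.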

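"We're showing superior per-thread throughput, which is very important for cloud and hyperscale operators from a systems and total cost of ownership [TCO] perspective," Bergey said. "It's important if you're a customer running VMs [virtual machines] from a cloud service provider."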

With the N2 core, Arm is trying to expand on its wins with the N1 introduced in 2019, which serves as the template for most Arm server chips on the market, most famously Amazon's.

Amazon Web Services, the world's largest cloud service provider, has started renting out access to servers on its cloud running on its internally designed Graviton2 CPU, a 64-core monolithic processor design based on the N1 core. The company said that it delivers 40% more performance than Intel CPUs, depending on the exact workload, at a 20% lower cost.

One of Arm's other silicon partners is Ampere, which also takes advantage of the N1 CPU core in its Altra series of server processors that scale up to 128 single-threaded cores per die, the most of any merchant server processor on the market. Last year, the Silicon Valley startup unveiled its 80-core Altra CPU, which TSMC fabs on the 7-nanometer process node.

Arm said that software giant Oracle would deploy Ampere’s Altra CPUs in its growing cloud computing business. Alibaba, one of the leading cloud computing vendors in China, is also prepping to roll out Arm-based cloud services. Arm said the N1 CPU architecture is used by four of the world's seven largest so-called hyperscale data center operators, counting AWS.

Arm said the N2 could also serve as a building block for server-class networking chips in data centers and 5G base stations. Marvell Technology revealed its new Octeon family of DPUs would use the N2 and said it plans to supply the first samples by the end of the year. Marvell said the chips would offer three times the performance of its previous generation.

"This is just the tip of the iceberg," Bergey said.

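Arm said the new N2 core features across-the-board microarchitecture improvements. Rather than pushing the performance envelope at any cost (the philosophy behind the V1), the company made incremental improvements to the N2 that can pay for themselves in power efficiency and area. Every feature was designed to preserve the N2's "balanced nature" (Fig. 4).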

While Arm enlarged the processing pipeline in the V1 core to accommodate many more instructions in flight, it said that it tapered the pipeline in the N2 to avoid penalties to power efficiency and die area. The company said it also reduced the N2's dependence on speculation, in which the CPU executes instructions before it knows whether they will be needed in order to boost performance.

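But the N2 core retains other microarchitecture improvements from the V1, including better branch prediction, data prefetching, and cache management in the front end of the CPU core. Arm said it packs all of these improvements into a footprint 25% smaller than the V1 core, so its silicon partners can bundle more of them on a single die.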

The CPU core also incorporates the same micro-operation cache as the V1 core to keep it pre-loaded with instructions to run. The cache results in major performance gains on small kernels frequently used in infrastructure workloads.

Another area of focus was power management. Dispatch throttling (DT) limits instruction dispatch as necessary to keep the CPU from overstepping the operator's desired power budget. Its maximum power mitigation mechanism (MPMM) smooths out the power consumed by the N2 to sustain clock frequencies. Power efficiency is a major priority in cloud data centers, which now account for around 1% of global electricity use.
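Arm has not published the control algorithm behind dispatch throttling, so the Python sketch here is only a toy feedback loop illustrating the general idea: estimate power from how many operations are dispatched per cycle, narrow dispatch when the estimate exceeds the budget, and widen it again when there is headroom. The `ThrottleModel` class, its power coefficients, and the maximum width of 5 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ThrottleModel:
    """Toy dispatch-throttling loop. All coefficients are hypothetical."""
    budget_watts: float
    max_width: int = 5           # hypothetical max ops dispatched per cycle
    min_width: int = 1
    static_watts: float = 0.5    # hypothetical leakage/idle power
    watts_per_op: float = 0.3    # hypothetical power per op/cycle of width

    def power(self, width: int) -> float:
        """Estimated power at a given dispatch width (simple linear model)."""
        return self.static_watts + self.watts_per_op * width

    def step(self, width: int) -> int:
        """Return the dispatch width to use in the next control window."""
        if self.power(width) > self.budget_watts and width > self.min_width:
            return width - 1     # over budget: dispatch fewer ops per cycle
        if width < self.max_width and self.power(width + 1) <= self.budget_watts:
            return width + 1     # headroom available: open the throttle again
        return width             # stay put


if __name__ == "__main__":
    model = ThrottleModel(budget_watts=1.5)
    width = model.max_width
    for window in range(6):
        print(f"window {window}: width={width}, est. power={model.power(width):.2f} W")
        width = model.step(width)
```

With a 1.5 W budget, the model steps down from 5 to 3 operations per cycle and settles there, because dispatching 4 would push the estimate back over budget.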

A unique power-saving feature is performance-defined power management or PDPM. This is used to scale the microarchitecture to bolster the power efficiency of the CPU, depending on its workload. The feature works by adapting the width, depth, or speculation of the N2 to match the microarchitecture to its current workload. In turn, that improves power efficiency.
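The article does not say how PDPM decides when to scale the core down. One plausible heuristic, shown here as a hypothetical Python sketch rather than Arm's actual policy, is to watch the instructions per cycle (IPC) a workload actually achieves and pick the cheapest core configuration that can still sustain it. The configuration names, widths, depths, relative power figures, and the 80% utilization threshold are invented for illustration.

```python
from typing import NamedTuple


class CoreConfig(NamedTuple):
    name: str
    width: int             # hypothetical issue width
    window_depth: int      # hypothetical out-of-order window size
    relative_power: float  # hypothetical power relative to the full core


# Hypothetical configurations, ordered from cheapest to most capable.
CONFIGS = [
    CoreConfig("narrow", 2, 64, 0.6),
    CoreConfig("medium", 3, 128, 0.8),
    CoreConfig("full", 5, 256, 1.0),
]

UTILIZATION_THRESHOLD = 0.8  # hypothetical: keep each config below 80% busy


def pick_config(observed_ipc: float) -> CoreConfig:
    """Return the cheapest config whose width can still sustain observed IPC.

    A memory-bound workload that retires about one instruction per cycle
    gains little from a wide, deep core, so a narrower one saves power.
    """
    for cfg in CONFIGS:
        if observed_ipc <= cfg.width * UTILIZATION_THRESHOLD:
            return cfg
    return CONFIGS[-1]  # nothing smaller fits: use the full core


for ipc in (1.0, 2.0, 3.5):
    print(ipc, "->", pick_config(ipc).name)
```

In this toy policy, a memory-bound workload running at about 1 IPC lands on the narrow configuration, while one sustaining 3.5 IPC keeps the full-width core.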

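For scalability, it uses Memory System Resource Partitioning and Monitoring (MPAM) to prevent any single process running on the CPU cores from hogging the system cache or other shared resources.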
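MPAM lets software tag memory traffic with a partition ID (PARTID), and memory-system components such as the shared cache can then limit what each partition may use. The Python sketch here models one such control, cache-portion partitioning, in which each PARTID gets a bitmap of the cache portions it may allocate into. The partition IDs, bitmaps, and eight-portion cache are hypothetical, and real MPAM is configured through hardware registers rather than a dictionary.

```python
from typing import Dict, List

# Toy model of MPAM-style cache-portion partitioning. The eight-portion cache,
# PARTIDs, and bitmaps are hypothetical; real MPAM is programmed through
# registers in each memory-system component.
NUM_PORTIONS = 8

portion_bitmaps: Dict[int, int] = {
    0: 0b00001111,  # e.g. latency-sensitive VM: portions 0-3
    1: 0b00110000,  # e.g. batch job: portions 4-5
    2: 0b11000000,  # e.g. noisy neighbor: portions 6-7 only
}


def allowed_portions(partid: int) -> List[int]:
    """Cache portions that requests tagged with this PARTID may fill."""
    bitmap = portion_bitmaps[partid]
    return [i for i in range(NUM_PORTIONS) if (bitmap >> i) & 1]


def may_allocate(partid: int, portion: int) -> bool:
    """True if this PARTID is allowed to allocate into the given portion."""
    return bool((portion_bitmaps[partid] >> portion) & 1)


def partitions_disjoint() -> bool:
    """True if no two PARTIDs share a cache portion."""
    seen = 0
    for bitmap in portion_bitmaps.values():
        if seen & bitmap:
            return False
        seen |= bitmap
    return True
```

Because partition 2's bitmap covers only portions 6 and 7, a noisy workload in that partition can fill at most a quarter of the cache, leaving the portions assigned to partitions 0 and 1 untouched.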

Based on internal performance tests, Arm said a 64-core N1 processor already has more performance per thread than Intel's Cascade Lake Xeon Scalable CPUs and AMD's Rome EPYC server processors that contain up to 28 cores and 64 cores, respectively. But with a 128-core N2 server processor, Arm said these performance gains would increase sharply.

But the company is going to have a tough time gaining ground on rivals. Last month, Intel rolled out its new generation of Xeon Scalable server chips, code-named Ice Lake, packing up to 40 x86 cores based on its Sunny Cove architecture and built on its 10-nanometer node. Intel is struggling to defend its 90% share of the data center chip market from AMD, which is pressuring it with its new EPYC Milan server CPUs that lash together up to 64 Zen 3 cores.

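"Our competitors are not standing still," Bergey said. "But we believe our per-core performance will match anything you would expect from traditional architectures."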

Along with its CPU improvements, Arm also announced a more advanced mesh interconnect for arranging the cores on a processor die, serving as the connective tissue inside future server chips based on its blueprints. Arm said the coherent mesh network interconnect, the CMN-700, brings "a step function increase" in performance over the existing CMN-600.

Arm is trying to give its partners more ways to customize the CPU, surround it with on-die accelerators and other intellectual property (IP) in a system-on-a-chip (SoC), or supplement it with memory, accelerators, or other server appendages to wring out more performance at the system level. “We will help you deliver solutions, heterogeneously or homogeneously, on a single die or within a multi-chip package, whatever is best for the use case," Bergey said.

The interconnect also supports PCIe Gen 5 for connecting accelerators that offload artificial intelligence and other workloads that tax the CPU, doubling the data rate of PCIe Gen 4 ports in the process. Arm also upgraded from DDR4 to the DDR5 DRAM interface. The IP also brings HBM3 high-bandwidth memory into the fold.
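The doubling is easy to check with the per-lane rates from the PCIe specifications: Gen 4 signals at 16 GT/s per lane and Gen 5 at 32 GT/s, both with 128b/130b encoding. The Python sketch here converts those rates into raw one-direction bandwidth for an x16 link; it ignores packet and protocol overhead, so real throughput is somewhat lower, and the helper name is illustrative.

```python
# Per-lane signaling rates (GT/s) from the PCIe specifications. Gen 3 and
# later use 128b/130b line encoding. Packet and protocol overhead is ignored,
# so delivered throughput is somewhat lower than these figures.
GT_PER_S = {4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gbytes(gen: int, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s after line encoding."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8  # bits -> bytes


for gen in (4, 5):
    print(f"PCIe Gen {gen} x16: {link_bandwidth_gbytes(gen, 16):.1f} GB/s per direction")
```

That works out to roughly 31.5 GB/s per direction for a Gen 4 x16 link and 63.0 GB/s for Gen 5.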

Also new is support for the second generation of Compute Express Link (CXL), which runs over the PCIe Gen 5 physical layer to attach larger pools of memory or accelerators to the server with cache coherency. CXL gives the CPU and accelerators "coherent" access to each other's memory, boosting performance in AI and other workloads.

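It also includes ports for the Cache Coherent Interconnect for Accelerators (CCIX) standard. Arm said that while it will use CXL to link accelerators and memory, it will use CCIX as the primary CPU-to-CPU interconnect within a socket. It can also serve system-in-package (SiP) designs or other chips assembled from smaller disaggregated dies (chiplets). Bergey said CCIX does double duty as a die-to-die interconnect.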

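"The demands of data center workloads and internet traffic are growing exponentially," he said, adding that "the traditional one-size-fits-all approach to compute is not the answer." He said Arm's customers want "the flexibility and freedom to achieve the right level of compute for the right application."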

"As Moore’s Law comes to an end, solutions providers are seeking specialized processing," he said.